Azure Build Health AI-Generated Summary

Azure Build Health is a pre-deployment testing tool used by Microsoft’s Azure engineers.

Azure teams spend, on average, 6+ hours a week investigating and resolving pre-deployment issues. Our team was tasked with lowering this cost.

We also looked for opportunities to reduce post-deployment downtime by surfacing key insights earlier in the process.

To reduce manual toil and install better checkpoints before deployments, I worked to integrate Copilot (AI) into Azure Build Health and create a “single pane of glass” for our users.

Persona

Release Manager

Our primary persona is the Release Manager. This role is a rotational responsibility held by Senior Developers.

Core Goals

Ensure their team’s releases pass all quality checks, are low risk, and are ready to go to the deployment approval team

Pains

Excessive manual effort to investigate issues

Hard to assess the risk of the release

Costly to prepare the documentation needed for the deployment approval team

First iteration

In the first iteration, we designed the Summary tab with swim lanes that visualized each checkpoint in the testing process. We also incorporated incident, bug, and task tracking to help with troubleshooting.

Initially, we partnered with a small set of teams. The Summary view was designed to fit their very specific needs. As more teams onboarded, it became clear the experience needed to evolve.

The Result

The MVP had a low adoption rate because it did not account for the diversity of Azure teams’ processes.

Research & Strategic Redesign

Research

Goals

Understand why the Summary view was being under-utilized

Increase usefulness for release managers

Identify additional opportunities to leverage Copilot AI Integration

Methodology

8 semi-structured interviews

2 current users

6 non-users

Findings

The swim lanes were confusing:

They lacked actionable insights in many situations.

Different teams have different release structures. The swim lanes did not account for this variability.

Additional opportunities were discovered to reduce manual effort:

Documentation and pre-deployment checks still needed to be gathered manually before going to the deployment approval team.

All teams needed to complete these checks no matter their release structure.

The information on the page was too detailed.

The incident, bug, and task tables were too low-level.

Release managers wanted to see the overall health of their release on a single page with actionable, high-level insights.

Design Changes

While designing the new experience, we continued to validate it with ~10 users.

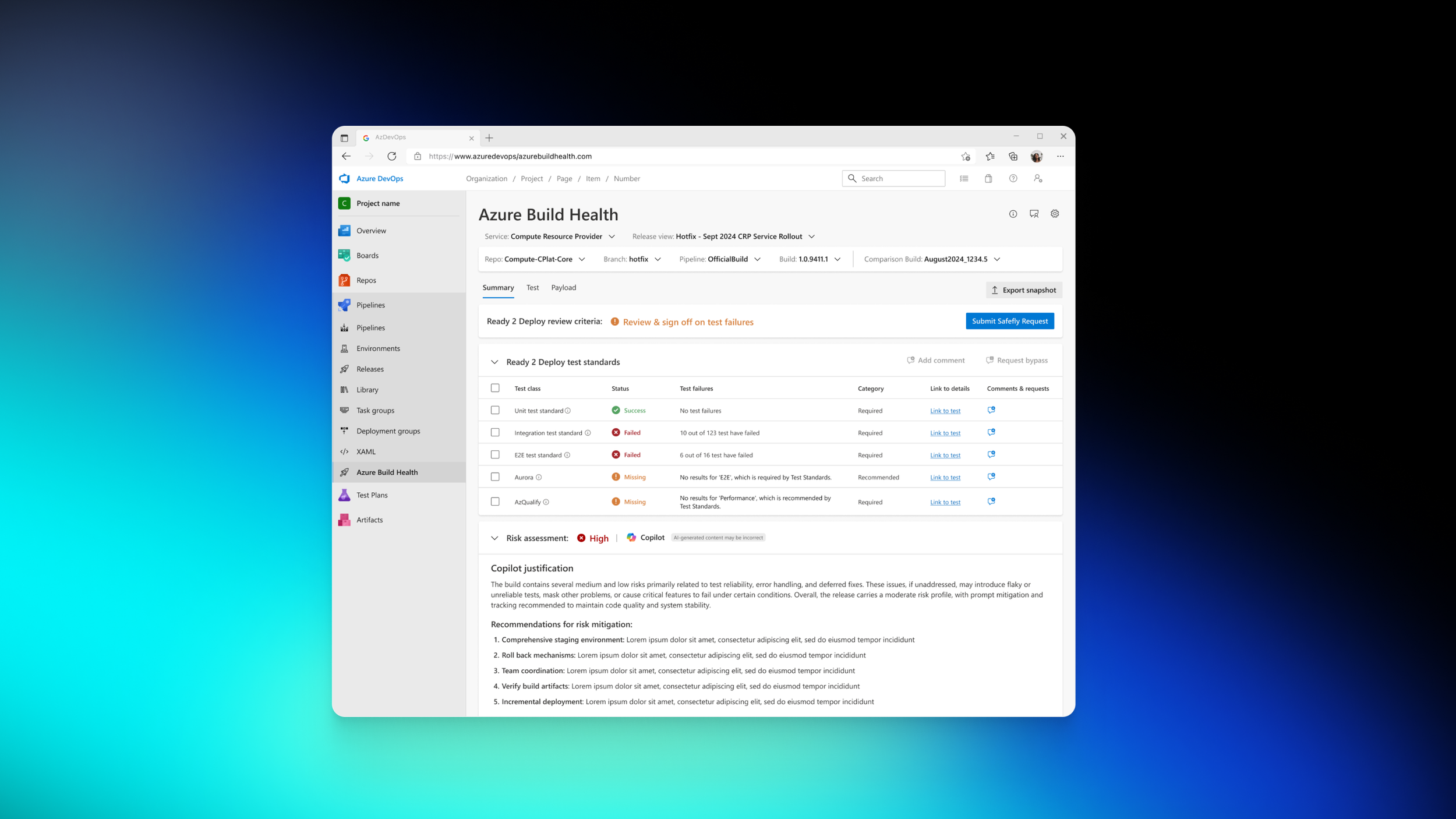

Using AI to assess risk

Using Copilot, I designed an experience that elevates high-risk items and gives a high-level summary of the release. This gives the release manager a clear and actionable next step.

During our validation, we heard that users wanted the opportunity to edit Copilot’s assessment. I designed interactions that allow the release manager to take control of the output.

Getting deployment approval

To reduce confusion:

I designed a clear signal of release readiness with possible next steps.

I removed the swim lanes and replaced them with a section that gives a high-level summary of required checkpoints.

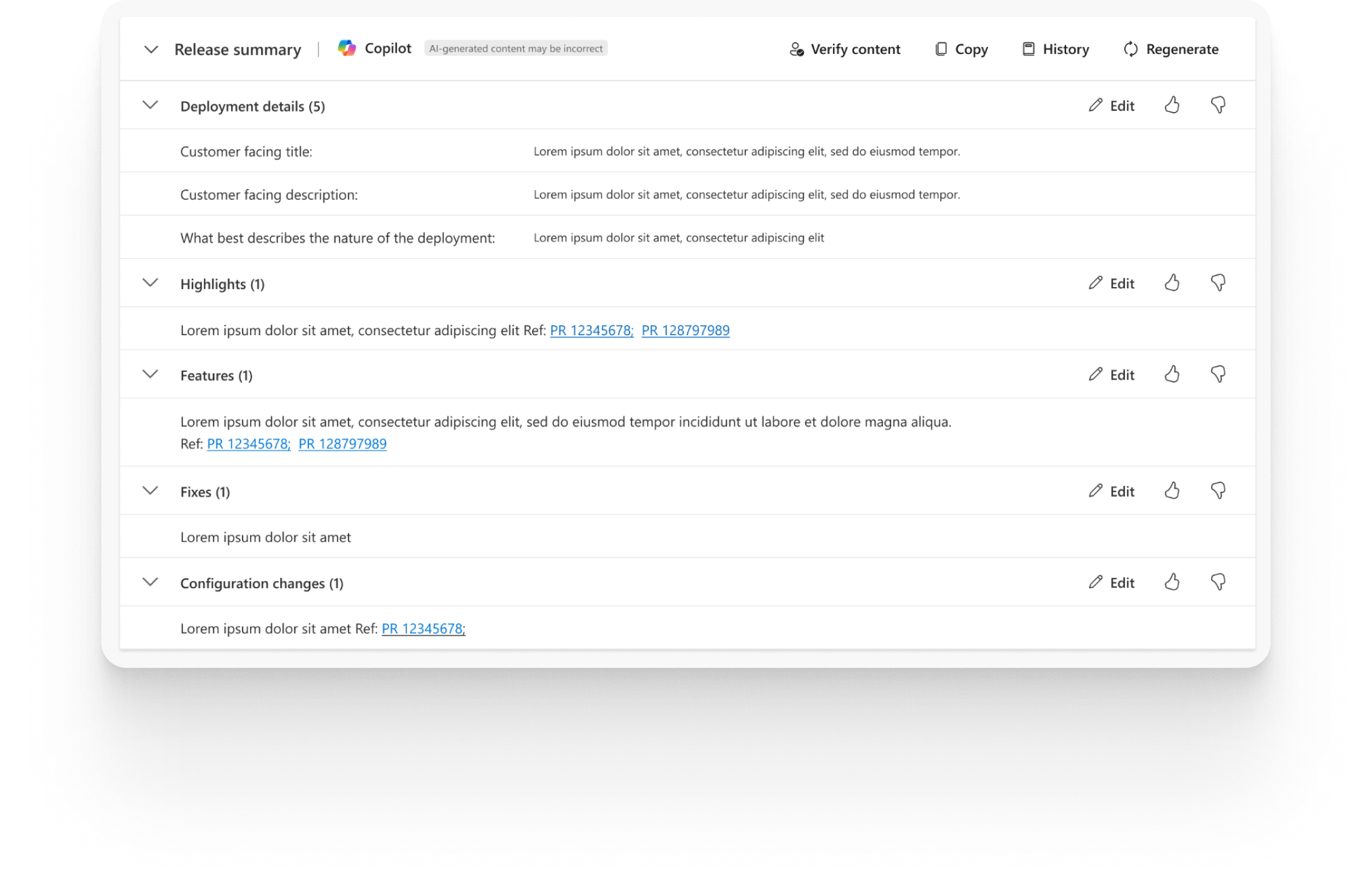

Preparing for Deployment

Once a release is ready, each team needs the same set of details to head to deployment. Instead of the release manager gathering this information manually, we leverage Copilot to provide it.

Using AI to reduce manual toil

For each release cycle, the release manager had to manually gather a specific set of information before deployment.

To cut the time it takes to gather each item, we leveraged Copilot. I designed an experience that automatically populates the information required for deployment.

Quantifiable results

Following the release of the new Summary Tab, we achieved a 60% increase in usage.

We reduced the time spent compiling information for deployment from more than an hour to under 15 minutes.

We reduced the time spent evaluating risk from 2-3 days a week to less than 1 hour.

Next Steps

Improve Trust:

Create a feedback loop to the Copilot development team to improve AI output quality.

Further increase how much of the AI output users can modify.

Educate Users:

Develop and lead a monthly brown-bag series to educate Azure teams and drive deeper utilization of the new features.

Monitor Engagement:

Continue to monitor user engagement to ensure better quality in deployments and get closer to 0% downtime.